Combine web scraping and Google Calendar to automate data collection and event management. Here's what you need to know:

- Web scraping: Automatically collect data from websites

- Google Calendar API: Add scraped data as calendar events

- Automation: Schedule scraping and calendar updates

Key benefits:

- Save time on manual data entry

- Keep your calendar always up-to-date

- Centralize information from multiple sources

To get started:

- Set up Google Calendar API access

- Choose a web scraping tool (e.g., Scrapy, Beautiful Soup)

- Install Python and required libraries

- Plan your workflow and data structure

- Build your scraper and integrate with Google Calendar

- Automate the process with scheduling tools

| Component | Function |
|---|---|
| Web Scraper | Collects data from websites |
| Google Calendar API | Manages calendar events |
| Automation Script | Links scraped data to calendar |
| Scheduler (e.g., Cron) | Runs scraper regularly |

Remember to follow website rules, handle API limits, and implement error handling for best results.

What You Need to Start

To combine web scraping with Google Calendar, you'll need three things:

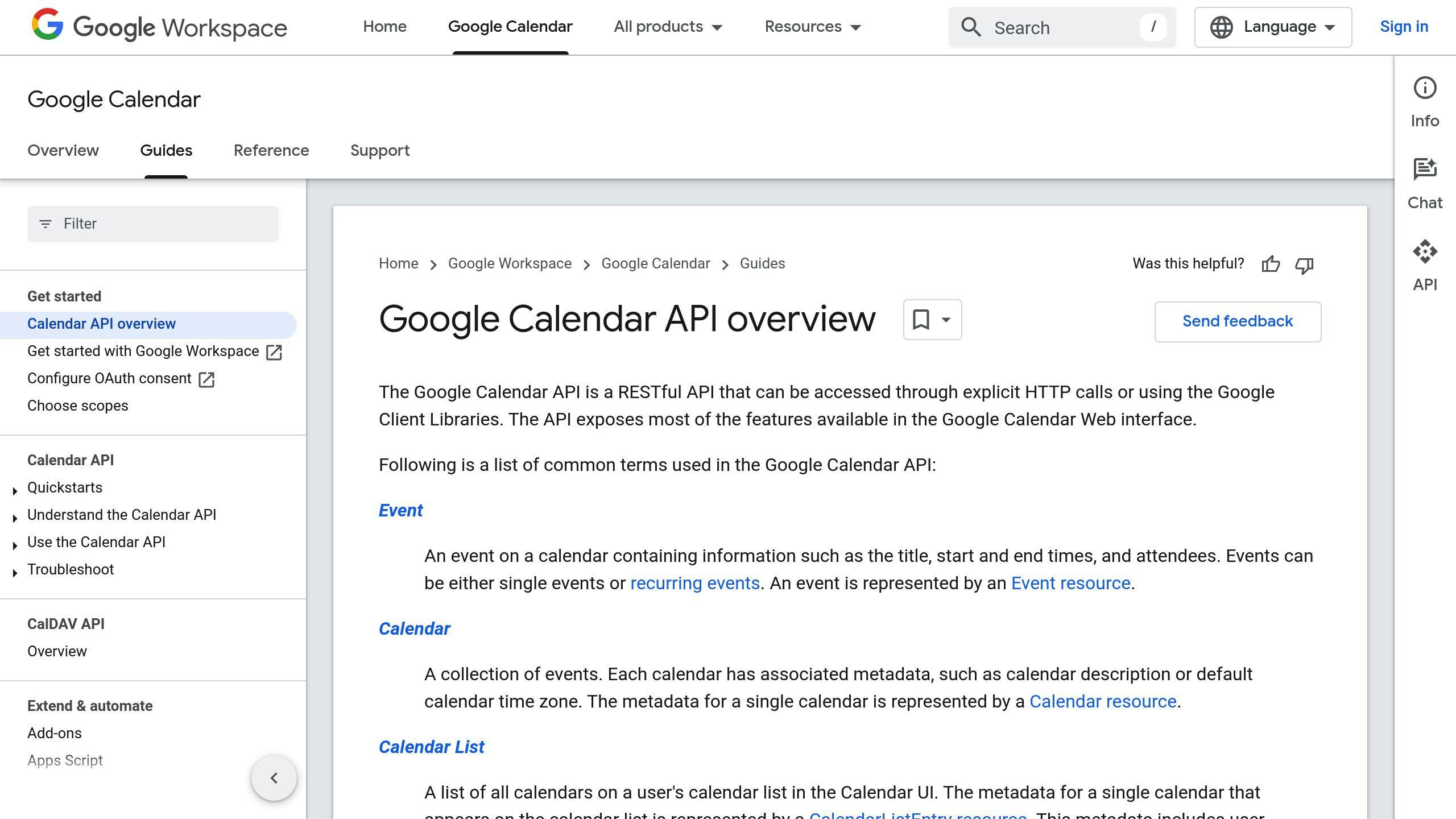

1. Google Calendar API access

Head to the Google Cloud Console. Create a new project, enable the Google Calendar API, and create an OAuth 2.0 client ID. Then download the client configuration file; the login code later in this guide loads it as client_secret.json.

2. Web scraping tools

Pick your poison:

| Tool | Type | Best For |
|---|---|---|
| Scrapy | Framework | Big projects |
| Beautiful Soup | Library | Simple sites |
| Selenium | Automation tool | JavaScript-heavy sites |

3. Python setup

Grab Python from python.org. When installing, check "Add Python to PATH". To make sure it worked, type python --version in your command prompt.

Now, install the libraries you'll need:

    pip install google-auth-oauthlib google-auth-httplib2 google-api-python-client
    pip install scrapy beautifulsoup4 selenium

And you're good to go!

Planning Your Workflow

To integrate web scraping with Google Calendar, you need a solid plan. Here's how to do it:

Choose Your Data

Pick what you want to scrape and how often. Maybe it's:

- Conference events

- Class schedules

- Project deadlines

Scrape as often as the source updates. A conference site? Monthly might do. Class schedule? Weekly could be better.

Link Data to Calendar

Turn your scraped info into calendar events. Think about:

- Title: Main topic

- Date and time: Get the format right

- Location: Where it's happening

- Description: Extra details

Here's how it might look:

| Scraped | Calendar Event |
|---|---|
| "Intro to Python" | Title |
| "2023-09-15 14:00" | Start Time |
| "Room 101, CS Building" | Location |
| "Bring laptop. No prerequisites." | Description |

Watch out for different date formats. Your scraper should handle "September 15, 2023" and "15/09/2023" alike.
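One simple way to cope with mixed date formats is to try each known format in turn. Here's a minimal sketch using only the standard library. The function name `parse_event_date` and the format list are assumptions made for this example, so extend the list to match the sites you scrape.

```python
from datetime import datetime

# Formats we expect to meet on scraped pages (an assumed list; extend as needed).
KNOWN_FORMATS = [
    "%Y-%m-%d %H:%M",  # 2023-09-15 14:00
    "%B %d, %Y",       # September 15, 2023 (month names depend on the locale)
    "%d/%m/%Y",        # 15/09/2023, read as day first
]


def parse_event_date(text: str) -> datetime:
    """Try each known format in order and return the first successful parse."""
    cleaned = text.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt)
        except ValueError:
            continue  # not this format, try the next one
    raise ValueError(f"Unrecognized date format: {text!r}")
```

Ambiguous inputs such as "03/04/2023" are read day-first here. If a source uses month-first dates, give that source its own format list rather than guessing.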

"I spent 12 hours building a course schedule scraper. Manual input might've been faster, but the scraper saves time long-term", says a student who automated their schedule.

For smooth sailing:

- Test your scraper thoroughly

- Set up error handling

- Use a database to review data before it hits your calendar
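For the review step, a local SQLite file is often enough. The sketch below stages scraped events in a table and only hands back the rows you've approved. The table name, column names, and function names are all assumptions for illustration, not part of any library.

```python
import sqlite3

# Assumed schema: one row per scraped event, unapproved by default.
SCHEMA = """
CREATE TABLE IF NOT EXISTS staged_events (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    title TEXT NOT NULL,
    start_time TEXT NOT NULL,
    location TEXT,
    description TEXT,
    approved INTEGER NOT NULL DEFAULT 0,
    UNIQUE (title, start_time)
)
"""


def open_staging_db(path: str = "staged_events.db") -> sqlite3.Connection:
    """Open (or create) the staging database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def stage_event(conn, title, start_time, location="", description=""):
    """Insert a scraped event; a repeat of the same title and start is ignored."""
    conn.execute(
        "INSERT OR IGNORE INTO staged_events "
        "(title, start_time, location, description) VALUES (?, ?, ?, ?)",
        (title, start_time, location, description),
    )
    conn.commit()


def approve_event(conn, event_id: int) -> None:
    """Mark one staged event as reviewed and ready for the calendar."""
    conn.execute("UPDATE staged_events SET approved = 1 WHERE id = ?", (event_id,))
    conn.commit()


def approved_events(conn):
    """Return approved rows as (title, start_time, location, description) tuples."""
    return conn.execute(
        "SELECT title, start_time, location, description "
        "FROM staged_events WHERE approved = 1 ORDER BY start_time"
    ).fetchall()
```

Your calendar script then reads only from `approved_events`, so a bad scrape never reaches the calendar without a look from you.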

Building the Scraper

Let's dive into creating our scraper. We'll use Python with Requests and Beautiful Soup to grab and parse web data.

Basic Scraping Script

Here's how to build a simple scraper:

1. Set up

Install what you need:

    pip install requests beautifulsoup4 pandas

2. Write the code

Here's a script to scrape top IMDb movies:

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

    url = "https://www.imdb.com/chart/top"

    # Fail fast on HTTP errors instead of parsing an error page.
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    soup = BeautifulSoup(response.content, 'html.parser')

    movies = []
    # These selectors match the chart's table layout when this was written;
    # update them if the page structure has changed.
    for row in soup.select('tbody.lister-list tr'):
        title_cell = row.find('td', class_='titleColumn')
        title = title_cell.find('a').get_text()
        # The year is rendered as "(1994)"; slice off the parentheses.
        year = title_cell.find('span', class_='secondaryInfo').get_text()[1:-1]
        rating = row.find('td', class_='ratingColumn imdbRating').find('strong').get_text()
        movies.append([title, year, rating])

    # Collect the rows in a DataFrame and write them to CSV.
    df = pd.DataFrame(movies, columns=['Title', 'Year', 'Rating'])
    df.to_csv('top-rated-movies.csv', index=False)

This script grabs IMDb's top movies, pulls out titles, years, and ratings, then saves it all to a CSV file.

Handling Errors

Scraping can hit snags. Here's how to deal with common issues:

1. HTTP Errors

Use a try-except:

    import requests

    url = 'https://www.example.com'

    try:
        response = requests.get(url, timeout=10)
        # raise_for_status() raises HTTPError for 4xx and 5xx responses.
        response.raise_for_status()
    except requests.exceptions.HTTPError as err:
        print(f'HTTP error occurred: {err}')

2. Connection Errors

Set up a session with auto-retries:

    import requests
    from requests.adapters import HTTPAdapter
    from urllib3.util.retry import Retry

    url = 'https://www.example.com'

    session = requests.Session()
    # Retry up to 3 times with exponential backoff on transient server errors.
    retries = Retry(total=3, backoff_factor=1, status_forcelist=[500, 502, 503, 504])
    session.mount('https://', HTTPAdapter(max_retries=retries))

    try:
        response = session.get(url, timeout=10)
    except requests.exceptions.ConnectionError as err:
        print(f'Connection error occurred: {err}')

3. Parsing Errors

Check if data looks right:

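    # Inside the scraping loop, where `row` is one table row:
    try:
        title = row.find('td', class_='titleColumn').find('a').get_text()
    except AttributeError:
        # find() returned None, so the expected element is missing.
        print(f"Couldn't find title in row: {row}")
        title = "N/A"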

4. Rate Limiting

Add delays between requests:

    import time
    import random

    time.sleep(random.uniform(1, 3))  # Random 1-3 second delay

Using Google Calendar

Want to add scraped data to your Google Calendar automatically? Here's how:

Logging into Google Calendar API

First, you need to set up access:

- Create a Google Cloud project

- Turn on the Calendar API

- Set up OAuth 2.0 credentials

Then use this code to log in:

    from google_auth_oauthlib.flow import InstalledAppFlow
    from googleapiclient.discovery import build

    # Full read/write access to the user's calendars.
    SCOPES = ['https://www.googleapis.com/auth/calendar']

    # Run the browser-based OAuth flow using the downloaded client config file.
    flow = InstalledAppFlow.from_client_secrets_file('client_secret.json', SCOPES)
    creds = flow.run_local_server(port=0)

    # Build the Calendar API client.
    service = build('calendar', 'v3', credentials=creds)

This opens a browser for you to allow access. Keep those credentials safe!

Adding Events to the Calendar

Now you can create events from your scraped data:

    event = {
        'summary': 'Web Scraping Results',
        'description': 'Latest data from scraping run',
        'start': {
            'dateTime': '2023-06-01T09:00:00',
            'timeZone': 'America/Los_Angeles',
        },
        'end': {
            'dateTime': '2023-06-01T10:00:00',
            'timeZone': 'America/Los_Angeles',
        },
    }

    # Insert the event into the user's main calendar.
    event = service.events().insert(calendarId='primary', body=event).execute()
    print(f"Event created: {event.get('htmlLink')}")

Remember:

- Use ISO format for dates/times

- Set the right timezone

- Put your scraped data in the description

- Use 'primary' for the main calendar
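The checklist above can be folded into a small helper that turns one scraped record into an event body. This is a sketch, not the only way to do it. The name `build_event`, the one-hour default duration, and the default time zone are assumptions, and `start` is expected to be a naive `datetime` in that time zone.

```python
from datetime import datetime, timedelta


def build_event(title: str, start: datetime, location: str = "",
                description: str = "", duration_minutes: int = 60,
                time_zone: str = "America/Los_Angeles") -> dict:
    """Turn one scraped record into a Calendar API event body."""
    end = start + timedelta(minutes=duration_minutes)
    return {
        "summary": title,
        "location": location,
        "description": description,
        # ISO 8601 without an offset; the timeZone field supplies the zone.
        "start": {"dateTime": start.isoformat(timespec="seconds"), "timeZone": time_zone},
        "end": {"dateTime": end.isoformat(timespec="seconds"), "timeZone": time_zone},
    }
```

Pass the result as `body` to the same `service.events().insert(...)` call shown earlier.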

And that's it! You're now a Google Calendar API pro.

Making It Run Automatically

Want your web scraping and Google Calendar integration to work on its own? Let's set it up with scheduling tools.

Using Cron Jobs

Cron jobs are perfect for scheduling tasks on Unix systems. They run your scripts at set times without you lifting a finger.

Here's how to set one up:

- Open your terminal

- Type crontab -e

- Add a new line with your schedule and script path

For example, to run your script daily at 9 AM:

    0 9 * * * /usr/bin/python3 /path/to/your/script.py

Quick tips:

- Use absolute file paths

- Set up logging

- Test your script before scheduling
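Cron runs your script with no terminal attached, so a log file is usually the only way to see what happened. The sketch below shows one way to follow the tips above: it resolves the log path to an absolute path and records success or failure. The logger name `calendar_sync` and both function names are made up for this example.

```python
import logging
from pathlib import Path


def get_logger(log_path) -> logging.Logger:
    """Return a logger that appends timestamped lines to an absolute log path."""
    log_path = Path(log_path).resolve()
    log_path.parent.mkdir(parents=True, exist_ok=True)
    logger = logging.getLogger("calendar_sync")
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid duplicate handlers if called twice
        handler = logging.FileHandler(log_path, encoding="utf-8")
        handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
        logger.addHandler(handler)
    return logger


def run_job(job, logger: logging.Logger) -> int:
    """Run one scheduled job and return an exit code (0 on success, 1 on failure)."""
    try:
        job()
    except Exception:
        logger.exception("Job failed")  # logs the full traceback
        return 1
    logger.info("Job finished")
    return 0
```

At the bottom of your script you could call `sys.exit(run_job(main, get_logger(...)))`, where `main` is your own scrape-and-sync function.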

Need something more robust? Try cloud-based options like Google Cloud Scheduler.

Here's a quick comparison:

| Method | Pros | Cons |
|---|---|---|
| Cron Jobs | Free, built-in | Local machine only |
| Cloud Scheduler | Scalable, managed | Possible costs |
| Python's sched | In-script solution | Needs constant runtime |

Pick the method that fits your needs and budget. Happy automating!

Updating and Fixing Conflicts

When you're scraping web data for Google Calendar, you'll need to manage events as new info comes in. Here's how to handle updates and fix conflicts:

Changing Existing Events

To keep your calendar fresh with scraped data:

- Use Google Calendar API to find and tweak events

- Set up auto-triggers for updates

Here's a Google Apps Script that updates events when data in a Google Sheet changes:

    function updateCalendarEvent() {
      var sheet = SpreadsheetApp.getActiveSpreadsheet().getActiveSheet();
      var calendar = CalendarApp.getCalendarById('your_calendar_id');

      // Cells C4, E4 and F4 hold the title and the start/end as Date values.
      var title = sheet.getRange("C4").getValue();
      var startTime = sheet.getRange("E4").getValue();
      var endTime = sheet.getRange("F4").getValue();

      // Look for an event with this title that overlaps the start/end window.
      // Note: an event moved completely outside its old slot will not be found.
      var events = calendar.getEvents(startTime, endTime, {search: title});

      if (events.length > 0) {
        events[0].setTime(startTime, endTime);
      } else {
        calendar.createEvent(title, startTime, endTime);
      }
    }

This script checks for existing events and updates them or creates new ones based on sheet data.

Fixing Mismatches

When scraped data doesn't line up with calendar entries:

- Compare scraped data with existing events

- Spot the differences

- Apply a fix-it strategy

Here's a Python script using the Google Calendar API to handle mismatches:

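    from googleapiclient.discovery import build

    # `service` is the client created with build('calendar', 'v3', credentials=creds).

    def fix_mismatches(scraped_events, service):
        """Update events that already exist; create the ones that don't."""
        for event in scraped_events:
            # q is a free-text search, so the first hit may not be an exact title match.
            existing_events = service.events().list(
                calendarId='primary',
                q=event['summary']
            ).execute().get('items', [])

            if existing_events:
                update_event(existing_events[0], event, service)
            else:
                create_event(event, service)

    def update_event(existing, new, service):
        """Copy the scraped start and end times onto the existing event."""
        existing['start'] = new['start']
        existing['end'] = new['end']
        service.events().update(
            calendarId='primary',
            eventId=existing['id'],
            body=existing
        ).execute()

    def create_event(event, service):
        """Insert a scraped event that has no match in the calendar."""
        service.events().insert(calendarId='primary', body=event).execute()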

This script compares scraped events with existing ones, updating or creating as needed.
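Matching on the title alone can pick the wrong event when titles are similar. One alternative is to give every scraped event a repeatable ID, so the same source item always maps to the same calendar event. The sketch below assumes the Calendar API's rule that custom event IDs use lowercase letters a-v and digits. A SHA-1 hex digest fits that rule. The function names and the choice of fields in the key are assumptions for this example.

```python
import hashlib


def stable_event_id(source_url: str, title: str) -> str:
    """Derive a repeatable event ID from fields that identify the event at its source."""
    key = f"{source_url}|{title.strip().lower()}"
    # Hex digits (0-9, a-f) are a subset of the characters allowed in event IDs.
    return hashlib.sha1(key.encode("utf-8")).hexdigest()


def upsert_event(service, event: dict, source_url: str, calendar_id: str = "primary"):
    """Update the event if its stable ID already exists, otherwise insert it."""
    # Imported here so stable_event_id() works without the Google client installed.
    from googleapiclient.errors import HttpError

    event_id = stable_event_id(source_url, event["summary"])
    body = {**event, "id": event_id}
    try:
        return service.events().update(
            calendarId=calendar_id, eventId=event_id, body=body
        ).execute()
    except HttpError as err:
        if err.resp.status != 404:
            raise  # anything other than "not found" is a real error
        return service.events().insert(calendarId=calendar_id, body=body).execute()
```

One caveat: an event you delete by hand can keep its ID reserved, so a later insert with the same ID may be rejected. If that matters for you, key the ID on something that changes when an event is re-announced.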

Pro tip: Handle the Free/Busy status of events to avoid scheduling conflicts. In Google Calendar, open the event settings, choose 'Free' or 'Busy' status, and click Save.
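If you create events through the API, the same Free/Busy choice lives in the event's `transparency` field: `opaque` blocks time (Busy) and `transparent` doesn't (Free). Here's a tiny sketch; the helper name `with_availability` is made up for this example.

```python
def with_availability(event: dict, busy: bool = True) -> dict:
    """Return a copy of the event body marked as Busy (opaque) or Free (transparent)."""
    return {**event, "transparency": "opaque" if busy else "transparent"}
```

Marking informational events, like deadlines you only want to see, as Free keeps them from blocking your schedule.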

Tips for Better Results

Want your web scraping and Google Calendar integration to run like a dream? Here's how:

Follow Website Rules

Don't be that person who ignores the rules. Here's what to do:

- Check the robots.txt file. It's like the website's rulebook.

- Stick to the Terms of Service. No one likes a lawsuit.

- Space out your requests. Give servers a breather with a 10-15 second delay between scrapes.
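Python's standard library can read robots.txt for you. The sketch below checks whether a URL may be fetched and how long to wait between requests. The user agent name, both function names, and the 10-second fallback delay are assumptions for this example.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "calendar-sync-bot"  # hypothetical name; use one that identifies your scraper


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse the text of a robots.txt file that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def fetch_policy(parser: RobotFileParser, url: str, default_delay: float = 10.0):
    """Return (allowed, delay_seconds) for one URL under the parsed rules."""
    allowed = parser.can_fetch(USER_AGENT, url)
    delay = parser.crawl_delay(USER_AGENT)  # None when no Crawl-delay is set
    return allowed, float(delay) if delay is not None else default_delay
```

For a live site you can skip `load_rules` and let the parser download the file itself with `set_url(...)` followed by `read()`.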

"Web scraping should be discreet, comply with site terms of service, check the robots.txt protocol, and avoid scraping personal data and secret information." - Alexandra Datsenko, Content Creator

Handle API Limits

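Both the sites you scrape and the Google Calendar API cap how many requests you can send. Here's how to play nice:

- Scrape during quiet hours. Less traffic, fewer problems.

- Use multiple IPs for large scraping jobs, and only where the site's terms allow it. Spread the love across at least 10 addresses. This won't raise Calendar API quotas, which are counted per project and per user.

- Mix up your request timing. Be unpredictable, like a human.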

| Tip | Why It Matters |
|---|---|
| Use APIs when possible | More reliable than scraping |
| Keep an eye on usage | Stay within limits |
| Cache data | Fewer API calls = happy servers |

Fixing Common Problems

Let's tackle the main issues you might face when combining web scraping with Google Calendar.

Scraping Errors

Web scraping can be tricky. Here's how to handle common problems:

1. HTTP Errors

| Error | Meaning | Fix |
|---|---|---|
| 404 | Page not found | Check URLs, add validation |
| 403 | Access forbidden | Rotate IPs, set User-Agent headers |
| 429 | Too many requests | Respect rate limits, add delays |

2. Parsing Errors

Can't extract data? Try these:

- Use a forgiving parser such as Beautiful Soup or Scrapy's selectors

- Update scripts for website changes

- Add error handling and logging

3. Data Quality Issues

For accurate data:

- Use validation tools

- Remove duplicates

- Set alerts for odd formats
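Here's a minimal sketch of those checks in plain Python. It assumes each scraped record is a dict with `title` and `start` keys, and that `start` is already an ISO 8601 string. Both the record shape and the function names are assumptions for this example.

```python
from datetime import datetime

REQUIRED_FIELDS = ("title", "start")  # assumed record keys


def is_valid(record: dict) -> bool:
    """A record is usable if required fields are non-empty and start parses as ISO 8601."""
    if any(not str(record.get(field) or "").strip() for field in REQUIRED_FIELDS):
        return False
    try:
        datetime.fromisoformat(record["start"])
    except (ValueError, TypeError):
        return False
    return True


def clean_records(records):
    """Split records into (unique valid records, rejected records)."""
    seen, kept, rejected = set(), [], []
    for record in records:
        if not is_valid(record):
            rejected.append(record)  # keep these for logging or alerts
            continue
        key = (str(record["title"]).strip().lower(), record["start"])
        if key in seen:
            continue  # duplicate of an event we already kept
        seen.add(key)
        kept.append(record)
    return kept, rejected
```

Send the `rejected` list to your log or alerting channel so odd formats get noticed instead of silently dropped.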

API Issues

Google Calendar API can be tricky. Here's how to fix common problems:

1. Authentication Errors

If you see "Sorry, but we ran into an error loading this page":

- Create a new client secret in the Google Cloud Console

- Use the new credentials in your API calls

2. Expired Tokens

Google access tokens are short-lived, typically about an hour. To keep your script running:

- Add token refresh to your code

- Store and update tokens safely
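The sketch below shows one common pattern with the Google auth libraries installed earlier: reuse a saved token, refresh it when it has expired, and fall back to the browser flow only when needed. The function names are assumptions for this example, and the file permissions set in `save_token` only take effect on Unix-like systems.

```python
import os
from pathlib import Path

SCOPES = ["https://www.googleapis.com/auth/calendar"]


def save_token(token_json: str, token_path: Path) -> None:
    """Write the token file so that only the current user can read it."""
    fd = os.open(token_path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        handle.write(token_json)


def load_credentials(token_path: Path, secrets_path: Path):
    """Reuse a stored token, refresh it if expired, or run the browser flow once."""
    # Imported here so save_token() works without the Google libraries installed.
    from google.auth.transport.requests import Request
    from google.oauth2.credentials import Credentials
    from google_auth_oauthlib.flow import InstalledAppFlow

    creds = None
    if token_path.exists():
        creds = Credentials.from_authorized_user_file(str(token_path), SCOPES)
    if creds and creds.valid:
        return creds
    if creds and creds.expired and creds.refresh_token:
        creds.refresh(Request())  # trades the refresh token for a new access token
    else:
        flow = InstalledAppFlow.from_client_secrets_file(str(secrets_path), SCOPES)
        creds = flow.run_local_server(port=0)
    save_token(creds.to_json(), token_path)
    return creds
```

Call it as `load_credentials(Path("token.json"), Path("client_secret.json"))` and pass the result to `build(...)`. Keep both files out of version control.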

3. Rate Limiting

To avoid hitting API limits:

- Cache data when you can

- Use exponential backoff for retries
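Exponential backoff means doubling the wait after every failed attempt, usually with a little randomness added. Here's a minimal, library-free sketch. The function name, the default limits, and the injectable `sleep` parameter (handy for testing) are assumptions for this example.

```python
import random
import time


def call_with_backoff(func, max_attempts=5, base_delay=1.0, max_delay=32.0,
                      retry_on=(Exception,), sleep=time.sleep):
    """Call func(); on failure wait base_delay * 2**attempt plus jitter, then retry."""
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, 1))  # jitter avoids synchronized retries
```

Wrap an API call in a lambda, for example `call_with_backoff(lambda: request.execute())`, and narrow `retry_on` to the rate-limit errors your client raises so real bugs aren't retried.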

"It happens because the token lifetime is very short and it expires almost immediately after you make any changes in your bubble app." - Kate, Zeroqode Support/QA

4. Debugging API Calls

For better troubleshooting:

- Test API requests with curl -v

- Log API errors on the client-side

Each error has a code, message, and details. Use these to fix issues fast.

Extra Features

Let's explore some extra features to boost your web scraping and Google Calendar setup.

Setting Up Alerts

Stay on top of calendar changes with these notification options:

1. Zapier automation

Connect Google Calendar to other apps:

| Trigger | Action |
|---|---|
| New event | SMS notification |
| Updated event | Slack message |
| Deleted event | Trello card |

2. Email notifications

Use Google Calendar's built-in feature:

- Open Calendar

- Settings (gear icon)

- "Event settings" > Set preferences

3. Google Calendar API

For custom notifications, use the API. Requires coding but offers more flexibility.
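With the API, per-event notifications go in the event's `reminders` field. The sketch below adds an email reminder a day ahead and a popup ten minutes ahead. The helper name and the default timings are assumptions for this example.

```python
def with_reminders(event: dict, email_minutes: int = 24 * 60,
                   popup_minutes: int = 10) -> dict:
    """Return a copy of the event body with custom email and popup reminders."""
    return {
        **event,
        "reminders": {
            "useDefault": False,  # ignore the calendar's default reminders
            "overrides": [
                {"method": "email", "minutes": email_minutes},
                {"method": "popup", "minutes": popup_minutes},
            ],
        },
    }
```

Apply it to an event body before you send it to the insert or update call.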

Personalizing Event Details

Make your calendar events more informative with scraped data:

1. Add source URLs

Include the scraped website URL in event descriptions for easy reference.

2. Use specific event titles

Instead of "Company X Earnings Call", try "Company X Q2 Earnings - EPS $1.23".

3. Color-code events

Identify event types at a glance:

| Event Type | Color |
|---|---|
| Earnings calls | Red |
| Product launches | Blue |
| Conferences | Green |

4. Auto-add attendees

Include scraped speaker or attendee info for better networking.
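These four ideas map onto fields of the event body. The sketch below is one way to apply them. The `colorId` values are an assumption based on the API's standard event palette, so confirm them for your account with `service.colors().get().execute()`. The event type keys and the function name are also made up for this example.

```python
# Assumed colorId values for red, blue and green in the standard event palette.
COLOR_IDS = {"earnings": "11", "launch": "9", "conference": "10"}


def personalize(event: dict, source_url: str, event_type: str = None,
                attendee_emails=()) -> dict:
    """Return a copy of the event with source link, color and attendees added."""
    enriched = dict(event)
    description = enriched.get("description", "")
    enriched["description"] = f"{description}\n\nSource: {source_url}".strip()
    enriched["source"] = {"title": "Scraped page", "url": source_url}
    if event_type in COLOR_IDS:
        enriched["colorId"] = COLOR_IDS[event_type]
    if attendee_emails:
        enriched["attendees"] = [{"email": email} for email in attendee_emails]
    return enriched
```

Be careful with attendees: depending on the `sendUpdates` setting of your insert or update call, Google may email invitations to the people you add.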

Wrap-Up

Combining web scraping with Google Calendar is a game-changer for data collection and event management. It's like having a personal assistant who never sleeps, always keeping your calendar fresh with the latest info.

Here's why it's so cool:

- It grabs data automatically (no more manual copy-paste)

- Your calendar's always up-to-date

- You get more time for the fun stuff

Want to make it work like a charm? Do this:

1. Pick a web scraping tool that fits your needs like a glove.

2. Set it up to scrape on a schedule. Think of it as teaching your computer to do your homework while you sleep.

3. Add some error-catching. It's like a safety net for your data.

4. Use content change detection. Why scrape if nothing's new, right?
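Change detection can be as simple as hashing what you scraped and comparing it with the hash from the last run. Here's a minimal sketch that keeps the hashes in a small JSON file. The function names and the state file layout are assumptions for this example.

```python
import hashlib
import json
from pathlib import Path


def content_fingerprint(content: str) -> str:
    """Hash the scraped content so two runs can be compared cheaply."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def has_changed(url: str, content: str, state_file: Path) -> bool:
    """Return True if the content differs from the last run, and remember the new hash."""
    state = {}
    if state_file.exists():
        state = json.loads(state_file.read_text(encoding="utf-8"))
    fingerprint = content_fingerprint(content)
    if state.get(url) == fingerprint:
        return False  # nothing new, skip the calendar update
    state[url] = fingerprint
    state_file.write_text(json.dumps(state, indent=2), encoding="utf-8")
    return True
```

Hash only the part of the page you care about, such as the extracted event list. Whole pages often change on every load because of ads or timestamps.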

Just remember: play nice with websites. Don't go overboard with scraping or you might get the digital equivalent of a "No Trespassing" sign.

Get this setup humming, and you'll wonder how you ever lived without it.

| Part | What it does |
|---|---|
| Web Scraping Tool | Your data detective |
| Google Calendar API | Your event organizer |
| Automation Script | The matchmaker between data and calendar |
| Cron Job | Your reliable alarm clock for scraping |